Openstack Neutron网络实验-01

本文将介绍在Openstack创建网络和启动实例过程中其网络拓扑的变化,用于了解Neutron的网络拓扑和机制。

0. 准备

参考《使用vagrant和virtualbox搭建openstack集群》安装一台单机Openstack,先不要增加额外的计算节点,这样所有的网络拓扑变化均可直接在Allinone节点查看。

另外需要准备一个镜像,用于创建openstack实例供实验使用,在packstack的配置参数中,提供了一个demo镜像的下载地址,但是上述文章中没有安装demo,因此需要手动下载该镜像,并在Openstack Dashboard中上传。

$ grep CONFIG_PROVISION_IMAGE allinone

CONFIG_PROVISION_IMAGE_NAME=cirros

CONFIG_PROVISION_IMAGE_URL=http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img

CONFIG_PROVISION_IMAGE_FORMAT=qcow2

CONFIG_PROVISION_IMAGE_PROPERTIES=

CONFIG_PROVISION_IMAGE_SSH_USER=cirros

上传镜像的菜单路径为:Project -> Compute -> Images -> Create Image。

Packstack安装默认的底层网络驱动是OVSSwitch,而租户网络类型为vxlan。在一步一步创建网络,启动实例的过程中,可以使用以下命令在网络节点(即Allinone节点os-ctl1)上查看每个操作之后网络拓扑的变化情况:

- ovs-vsctl show, 查看ovs网桥

- brctl show,查看linux网桥

- ip netns,查看网络命名空间

- ip address,查看root命名空间内的设备和地址

- ip netns exec <namespace> ip address,查看指定命名空间内的设备和地址

1. 从无到有

初始化完装完Openstack后,在网络节点即os-ctl1中运行上述命令:

# ovs-vsctl show

ef2ce1ab-4571-4e55-84b8-50163f9c0e0a

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-tun

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port br-tun

Interface br-tun

type: internal

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Bridge br-ex

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

Port br-ex

Interface br-ex

type: internal

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port br-int

Interface br-int

type: internal

ovs_version: "2.9.0"

# brctl show

bridge name bridge id STP enabled interfaces

# ip netns

# ip address | grep ^[0-9]

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

4: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000

5: br-ex: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000

6: br-int: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000

7: br-tun: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000

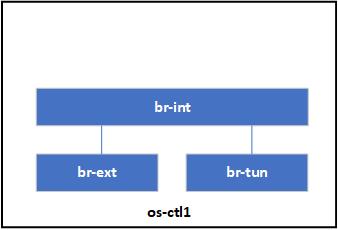

上述结果显示,Neutron默认在网络节点上创建了3个OVS网桥,分别为br-tun,br-ex,br-int,其中br-int分别和br-tun,br-ex互连,拓扑图如下:

2. 创建租户私有网络

在Openstack Dashborad上创建一个租户网络,菜单路径为Project -> Network -> Networks -> Create Network。填入如下参数:

- Network Name:test-net

- Subnet Name:test-net-subnet

- Network Address:172.16.2.0/24

- Gateway IP:172.16.2.1

- Enable DHCP: true

创建完毕后,网络节点拓扑变化如下:

# ovs-vsctl show

...

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port br-int

Interface br-int

type: internal

Port "tape68eed07-f5"

tag: 2

Interface "tape68eed07-f5"

type: internal

...

# ip netns

qdhcp-d18f42ff-a688-4c57-acde-6299326022dc

# ip netns exec qdhcp-d18f42ff-a688-4c57-acde-6299326022dc ip address

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

9: tape68eed07-f5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN qlen 1000

link/ether fa:16:3e:89:e5:fa brd ff:ff:ff:ff:ff:ff

inet 172.16.2.2/24 brd 172.16.2.255 scope global tape68eed07-f5

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe89:e5fa/64 scope link

valid_lft forever preferred_lft forever

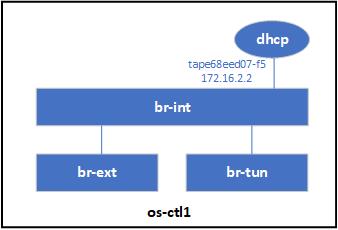

上述结果显示,由于在创建subnet的时候勾选了启用DHCP,因此Neutron为DHCP服务创建了一个命名空间,并在其中创建一个TAP设备tape68eed07-f5作为DHCP的网口,该网口被加入到br-int网桥中,该DHCP服务的地址为172.16.2.2。拓扑图如下:

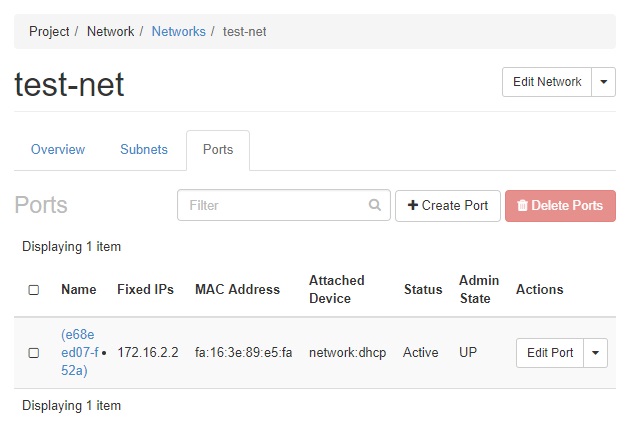

同时,在dashboard中,可以看到新创建的network自带了一个port,这个port即为dhcp接入的端口。

3. 启动实例并将其接入网络

在Openstack Dashborad上启动一个nova实例,菜单路径为Project -> Compute -> Instances -> Launch Instance。填入如下参数:

- Instance Name: test-vm1

- Source中选择之前上传的镜像,由于是实验环境,可将“Delete Volume on Instance Delete”设为Yes,避免浪费空间。

- Flavor: m1.tiny

- Networks: 上面创建的test-net

实例启动成功后,网络节点拓扑变化如下:

# ovs-vsctl show

...

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "qvo5d07f509-f1"

tag: 2

Interface "qvo5d07f509-f1"

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port br-int

Interface br-int

type: internal

Port "tape68eed07-f5"

tag: 2

Interface "tape68eed07-f5"

type: internal

...

# brctl show

bridge name bridge id STP enabled interfaces

qbr5d07f509-f1 8000.2eeb31a8ff84 no qvb5d07f509-f1

tap5d07f509-f1

# ip address | grep ^[0-9]

...

10: qbr5d07f509-f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP qlen 1000

11: qvo5d07f509-f1@qvb5d07f509-f1: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1450 qdisc noqueue master ovs-system state UP qlen 1000

12: qvb5d07f509-f1@qvo5d07f509-f1: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1450 qdisc noqueue master qbr5d07f509-f1 state UP qlen 1000

13: tap5d07f509-f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast master qbr5d07f509-f1 state UNKNOWN qlen 1000

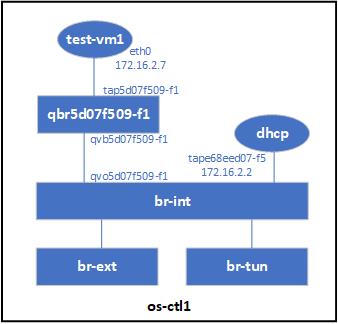

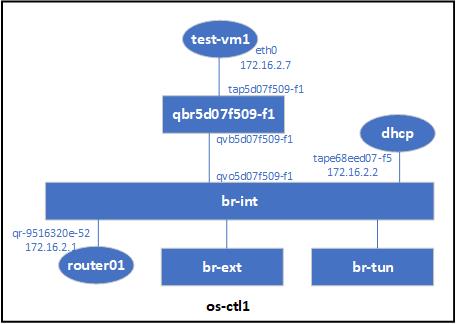

上述结果显示,Neutron为新实例创建了4个设备,其中一个是Linux网桥qbr5d07f509-f1,该网桥接入了两个设备:

- qvb5d07f509-f1,这个设备和qvo5d07f509-f1是一对VETH设备,而qvo5d07f509-f1被加入到了OVS网桥br-int中,因此该设备用于新建的Linux网桥和br-int的连接。

- tap5d07f509-f1,这个TAP设备就是供新实例接入网络的网口。

拓扑图如下:

进到创建的实例中查看网络配置:

# sudo virsh list

Id Name State

----------------------------------------------------

1 instance-00000001 running

# virsh console 1

Connected to domain instance-00000001

Escape character is ^]

login as 'cirros' user. default password: 'cubswin:)'. use 'sudo' for root.

cirros login: cirros

Password:

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast qlen 1000

link/ether fa:16:3e:85:a9:84 brd ff:ff:ff:ff:ff:ff

inet 172.16.2.7/24 brd 172.16.2.255 scope global eth0

inet6 fe80::f816:3eff:fe85:a984/64 scope link

valid_lft forever preferred_lft forever

$ ping 172.16.2.2

PING 172.16.2.2 (172.16.2.2): 56 data bytes

64 bytes from 172.16.2.2: seq=0 ttl=64 time=3.599 ms

--- 172.16.2.2 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 3.599/3.599/3.599 ms

$ ping 172.16.2.1

PING 172.16.2.1 (172.16.2.1): 56 data bytes

--- 172.16.2.1 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

可以看到,DHCP为该实例分配了地址172.16.2.7,并且可以ping通DHCP服务器172.16.2.2,但是无法ping通网关172.16.2.1,这是因为目前的实验仅仅局限在子网内部,网关设备还没有创建。

4. 创建Router为网络互通做准备

在Openstack Dashborad上创建Router,菜单路径为Project -> Network -> Routers -> Create Router。填入如下参数:

- Router Name: router01

- 创建完毕后,进入router为其添加Interface, 将目前仅有的test-net-subnet加入到router中

添加Interface后,根据Interface所在子网,Neutron自动为其分配IP 172.16.2.1,并且这个子网内的实例test-vm1可以通过该ip访问router,这个router即为这个子网的网关。

此时,网络节点拓扑变化如下:

# ovs-vsctl show

...

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "qvo5d07f509-f1"

tag: 2

Interface "qvo5d07f509-f1"

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port br-int

Interface br-int

type: internal

Port "tape68eed07-f5"

tag: 2

Interface "tape68eed07-f5"

type: internal

Port "qr-9516320e-52"

tag: 2

Interface "qr-9516320e-52"

type: internal

...

# ip netns

qrouter-7d360e8e-e392-4def-816b-f92f7e5f9bc5

qdhcp-d18f42ff-a688-4c57-acde-6299326022dc

# sudo ip netns exec qrouter-7d360e8e-e392-4def-816b-f92f7e5f9bc5 ip address

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

14: qr-9516320e-52: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN qlen 1000

link/ether fa:16:3e:f6:ec:e8 brd ff:ff:ff:ff:ff:ff

inet 172.16.2.1/24 brd 172.16.2.255 scope global qr-9516320e-52

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fef6:ece8/64 scope link

valid_lft forever preferred_lft forever

上述结果显示,Neutron为router01创建了一个新的命名空间,并在其中创建了一个TAP设备qr-9516320e-52,该设备被加入到br-int网桥中,地址为172.16.2.1。拓扑图如下:

重新进入虚机,发现可以ping通网关172.16.2.1了。

# virsh console 1

Connected to domain instance-00000001

Escape character is ^]

login as 'cirros' user. default password: 'cubswin:)'. use 'sudo' for root.

cirros login: cirros

Password:

$ ping 172.16.2.1

PING 172.16.2.1 (172.16.2.1): 56 data bytes

64 bytes from 172.16.2.1: seq=0 ttl=64 time=4.134 ms

64 bytes from 172.16.2.1: seq=1 ttl=64 time=0.695 ms

--- 172.16.2.1 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.695/2.414/4.134 ms

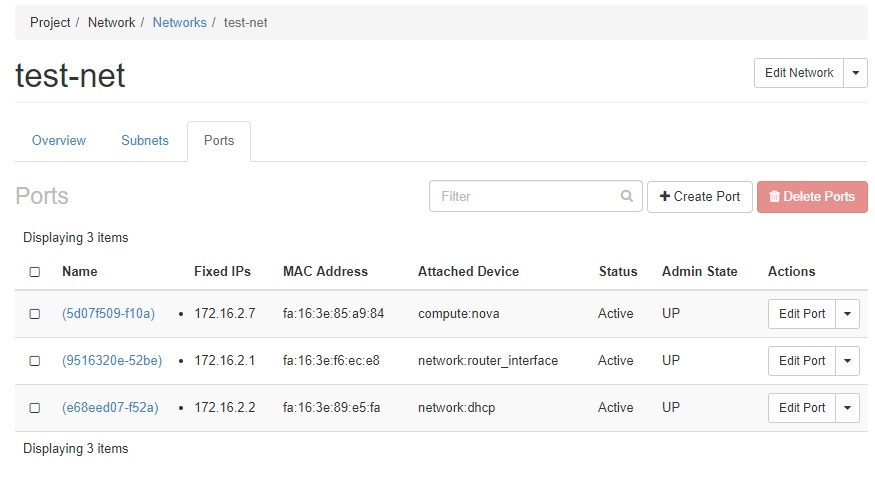

最后,再回到创建的test-net网络中查看其详细信息,发现此时已经有了3个port,分别接入了dhcp,router01,test-vm1。

问题:

- 此时br-int无法从dhcp服务器上获取到ip地址,手动配置一个同网段的地址也无法ping通网关,为什么?

- 什么时候创建TAP设备,什么时候创建VETH设备,两者区别是什么?